Blob Tracking

We want Dan-ED to operate ‘autonomously’ for as many of the challenges as possible. To achieve this we need to add a camera and implement machine vision. We already have line follower and ultrasonic sensors that provide feedback to Dan-ED, but we need a specific ability to detect objects.

After a tremendous amount of work I think we finally licked the code for Dan-ED to ‘see’!

Our machine vision code uses OpenCV. It is very simple, looking for objects based on colour. We use the HSV colour space as this is most robust to background lighting variations. Unlike RGB we only need to tune into a particular ‘Hue (H)’ in order to recognise a colour.
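To see why hue is a convenient handle, here is a minimal sketch (not the actual Dan-ED code) using only Python's standard `colorsys` module. The helper name `rgb_to_opencv_hsv` is made up for illustration; it rescales the result to the 8-bit convention OpenCV uses, where H runs from 0 to 179 and S and V run from 0 to 255.

```python
import colorsys

def rgb_to_opencv_hsv(r, g, b):
    """Convert 8-bit RGB to HSV on OpenCV's 8-bit scale (H: 0-179, S, V: 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return round(h * 180) % 180, round(s * 255), round(v * 255)

# The same red surface under bright and dim lighting, plus a green one.
bright_red = rgb_to_opencv_hsv(255, 0, 0)
dim_red = rgb_to_opencv_hsv(90, 0, 0)
green = rgb_to_opencv_hsv(0, 200, 0)

print("bright red:", bright_red)
print("dim red:   ", dim_red)
print("green:     ", green)
```

The two reds differ a lot in RGB, but in HSV they share the same hue and only the V (brightness) channel changes, while green lands on a clearly different hue.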

We have decided to apply our machine vision code to the Minesweeper challenge first as detecting a coloured square is a fundamental aspect of the challenge. Once detected Dan-ED must autonomously drive to the lit square to ‘defuse’ it.

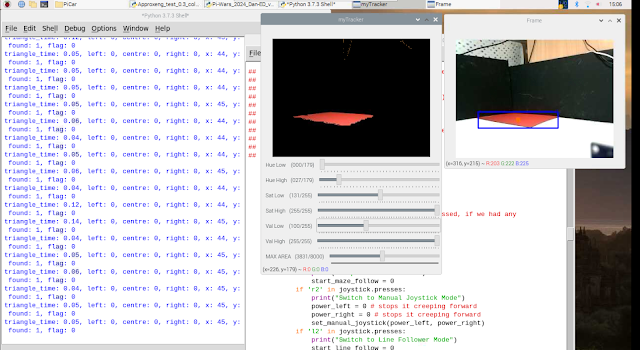

Our machine vision code includes ‘track bars’ that allow us to ‘select’ the object to be tracked based on colour.

We placed a piece of red card on the arena floor to simulate one of the floor panels in the Minesweeper challenge ‘lighting up’. With the track bars adjusted to ‘select’ for red, it worked quite well, easily picking out the red card based on the HSV values defined. We selected for H values in range 0 – 27 which corresponds to the low red range in HSV space.

However, when we tried to detect the light from a red LED light source (torch), as would be the case in the real Minesweeper challenge, we found the red light was too bright and saturated the camera. This resulted in the ‘red blob’ from the LED torch appearing as a ‘white blob’ to the camera. The solution was to add a ‘red filter’ in front of the camera and adjust to H values in range 0 – 76 to pick out the ‘white’ blob in the camera view.
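A rough illustration of why a saturated blob is hard to catch with settings tuned for the red card: a fully clipped pixel has almost no colour left, so its saturation drops to zero. The sketch below uses only the standard library. The helper names and the saturation and value minimums are assumptions for illustration, not our actual settings; only the H ranges 0 – 27 and 0 – 76 come from the text above.

```python
import colorsys

def rgb_to_opencv_hsv(r, g, b):
    """Convert 8-bit RGB to HSV on OpenCV's 8-bit scale (H: 0-179, S, V: 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return round(h * 180) % 180, round(s * 255), round(v * 255)

def in_range(hsv, h_max, s_min, v_min, h_min=0):
    """True if an HSV triple falls inside the selected range."""
    h, s, v = hsv
    return h_min <= h <= h_max and s >= s_min and v >= v_min

clipped = rgb_to_opencv_hsv(255, 255, 255)  # what a saturated sensor reports
red_card = rgb_to_opencv_hsv(200, 30, 30)   # a typical matte red surface

# Thresholds tuned for the card (assumed s_min and v_min) reject the clipped pixel...
card_settings = dict(h_max=27, s_min=100, v_min=100)
# ...while a wider range that accepts low saturation picks it up.
wide_settings = dict(h_max=76, s_min=0, v_min=200)

print("clipped pixel HSV:", clipped)
print("card settings match card:   ", in_range(red_card, **card_settings))
print("card settings match clipped:", in_range(clipped, **card_settings))
print("wide settings match clipped:", in_range(clipped, **wide_settings))
```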

This video shows what happens when we try to track the red light from the LED torch. We needed to add a filter to allow the camera to pick out the 'blob'.

Our machine vision code is able to pick out and 'track' the blob as the torch is moved around. It works quite well.

Here's Dan-ED with machine vision code implemented. Dan-ED is able to follow the red 'blob' around the arena! It's like a pet cat chasing after a ball!
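The chasing behaviour can be sketched as a simple proportional steering rule: the further the blob is from the centre of the image, the harder the robot turns towards it. This is a minimal sketch under assumptions, not Dan-ED's actual drive code. The function name `steer_towards`, the motor speed scale of -1 to 1, and the `base_speed` and `gain` values are all hypothetical.

```python
def clamp(value, low=-1.0, high=1.0):
    """Limit a motor command to the allowed range."""
    return max(low, min(high, value))

def steer_towards(blob_x, frame_width, base_speed=0.5, gain=0.5):
    """Return (left, right) motor speeds that turn the robot towards the blob.

    blob_x is the blob's horizontal pixel position, or None if no blob was found.
    """
    if blob_x is None:
        return 0.0, 0.0  # nothing to chase: stop
    half_width = frame_width / 2
    # Normalised error: -1 at the left edge, 0 when centred, +1 at the right edge.
    error = (blob_x - half_width) / half_width
    turn = gain * error
    # Blob on the right: speed up the left wheel and slow the right one.
    return clamp(base_speed + turn), clamp(base_speed - turn)

print("centred:", steer_towards(160, 320))
print("right:  ", steer_towards(300, 320))
print("left:   ", steer_towards(20, 320))
print("lost:   ", steer_towards(None, 320))
```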
